During five days in January 2020, a group of about 50 researchers from IT and Computer Science from a wide range of universities extensively cooperated on challenging problems proposed by the industry.

Computer Readable Text

The ICT with Industry workshop (#ictwi) was facilitated by the Lorentz Centre in Leiden, and brought together scientists, in particular (junior) research staff and PhD students, and professionals from industry and government. The workshop revolved around a number of case studies, which were subject to an intense week of analysing, discussing, and modelling solutions.

The group at the KNAW Humanities Cluster was approached by colleagues at the Dutch National Library (KB) research lab. They immediately teamed up with University Groningen professor Lambert Schomaker who is expert in artificial intelligence and cognitive engineering.

The challenge is one that we regularly face in the construction of digital infrastructure in humanities and social science research, as well as in the digital cultural heritage sector. There are over 100 million pages of books, newspapers, magazines and other texts in Dutch currently digitised. Many of which were printed before 1800. Approximately 550.000 books in this collection were digitised by the KB in partnership with Google and are now available for research via KB Dataservices.

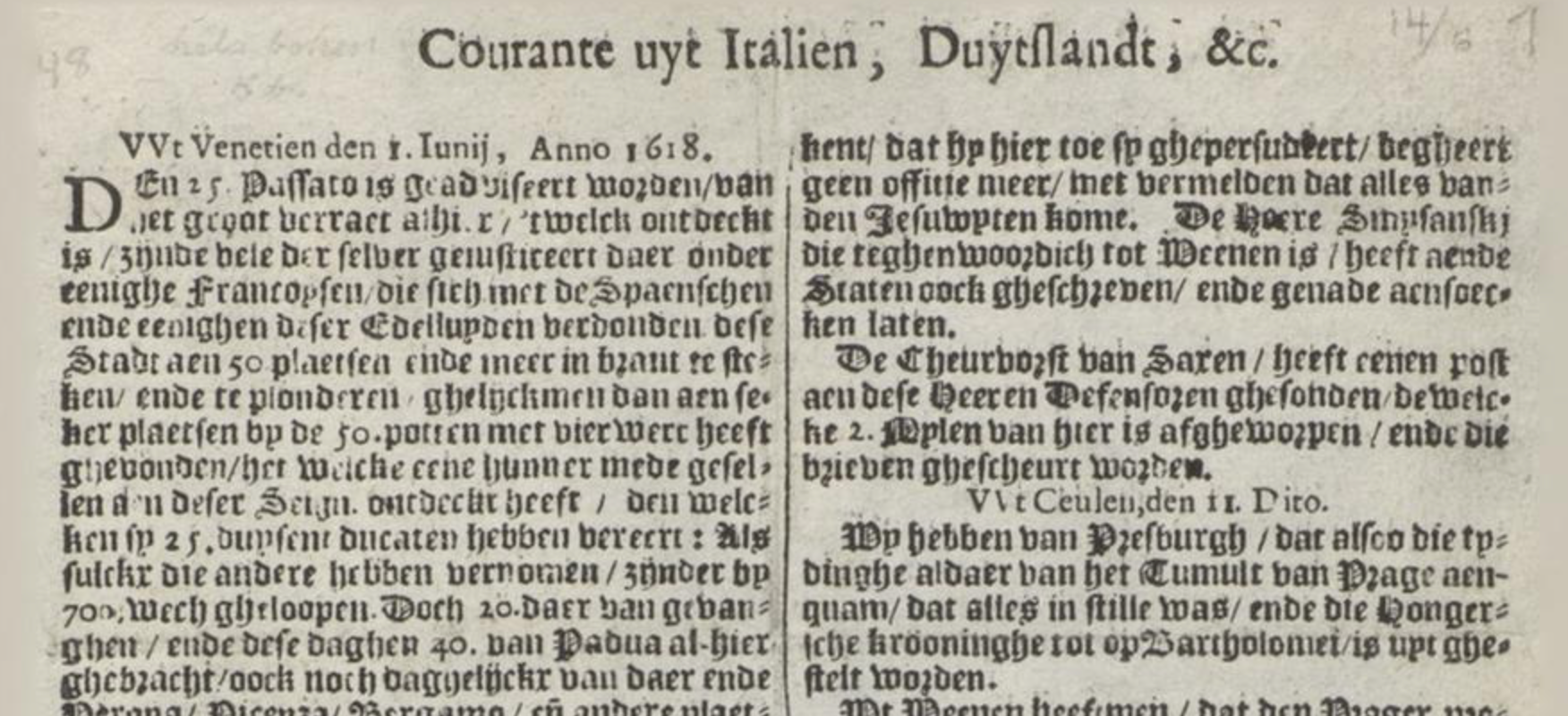

Unfortunately, the computer readable text resulting from this digitisation is often of poor quality. This is due in large part to the use of the so-called ‘gothic’ or blackletter typefaces widely employed by printers between the 15th and 18th centuries as well as the physical deterioration of the paper itself. Current OCR (optical character recognition) software, such as ABBYY, which is used to transform bitmapped images of letters to machine readable glyphs does not achieve the accuracy levels required for digital scholarship on historical texts. The task is made more difficult due to the large variety of Dutch gothic typefaces in use in the early modern period.

The specialist team worked on the improvement of OCR (post-processing) techniques for early modern texts which were printed (either fully or partially) in a variety of gothic scripts. Next to the type-related issues they also addressed complex layout problems as well as bleed-through of text from opposite pages, multiple and historic languages, and crooked or warped lines caused by the digitisation process.

Niche Problems?

This may seem like a niche problem, but there are opportunities here. Solving the complicating issues around digitisation improves the quality of consumer OCR, benefits the improvement of handwritten text recognition, and our understanding of artificial intelligence, cognitive engineering and human-computer interaction. Moreover, expertise in this field is a crucial step in understanding our heritage. The European Union has made digital cultural heritage a key field in its framework programme for research and technical development. People have now unprecedented opportunities to access cultural material, while libraries, archives, universities and research institutions can reach out to broader audiences, engage new users and develop creative and accessible content for tourism, leisure, and education. In short: there is public interest and funding for technical innovation in heritage.

As a public entrepreneur working within academia, I love these one-week workshops. It allows people to team up with commercial and public partners, test new ideas, and lay the foundation for future cooperations that may result in new sources of funding. It breaks the boundaries of traditional fields and disciplines. And best of all: it allows you to break stuff.